# HunyuanVideo 1.5 User Guide

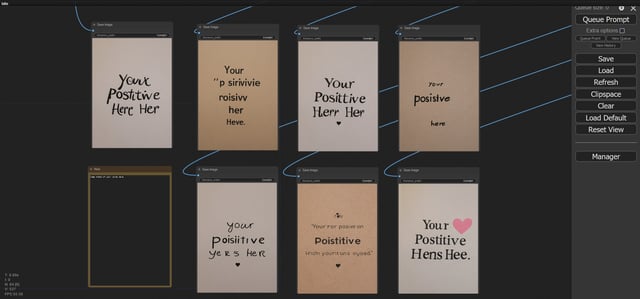

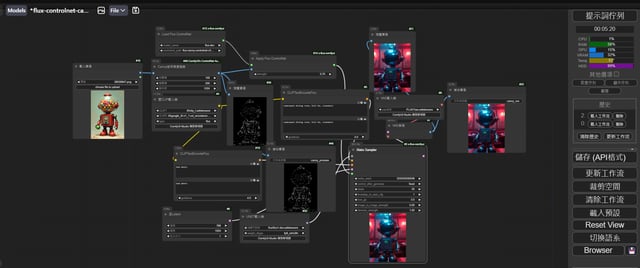

This guide walks you through getting the most out of HunyuanVideo 1.5 on TensorArt, from the basics of text-to-video and image-to-video all the way to advanced control over style, mood, camera movement, and lighting. Even without relying on external prompt-rewriting tools, you’ll learn how to write high-level prompts that produce high-quality, cinematic results and unlock your full creative potential.

## Basic Features

### Text-to-Video

Overview: Input a text description and the model will generate a matching video. For better control over the output, we strongly recommend using structured prompts. Just like professional creators, you can combine multiple “key elements” to shape the result.

Core Formula: Prompt = Subject + Action/Motion + Scene + [Shot Size] + [Camera Movement] + [Lighting] + [Style] + [Mood]

Items in brackets [ ] are optional, and you can freely mix and match them depending on your creative goals.

Basic Usage: Subject + Action + Scene

Advanced Usage: Add more control tags as needed, e.g.: Subject + Action + Scene + Style + Camera Movement + Lighting

Prompt Examples:

A mushroom grew out of the grass.

### Image-to-Video

Overview: Upload one image plus a text prompt, and the model will generate a video starting from your image. The first frame is taken directly from the uploaded image, while the subsequent frames evolve according to the text instructions you provide.

Core Formula: Prompt = Subject Motion + Scene Motion + [Camera Movement]

Items in brackets [ ] are optional and can be added for more cinematic control.

Prompt Examples:

The girl in the scene slowly raises her head, her gaze fixed on the upper right of the frame. The camera follows her gaze, gradually revealing a Rococo-style window with a frame adorned with intricate carvings and gold lines, the glass reflecting the soft light from inside.
The girl's headscarf and earrings sway slightly as she moves, and the edge of her collar wrinkles subtly with her movements.

## Advanced Controls

### Style Control

You can guide the overall visual style of the generated video by adding style-related keywords to your prompt.

#### Realistic / Cinematic Style

A tired middle-aged Asian man, wearing a pilling gray sweater, with fine wrinkles at the corners of his eyes, looks worriedly out the window. Cinematic lighting, realist style.

#### Animation / Painting Style

This low-poly 3D animation features a gigantic, geometrically shaped whale swimming slowly through an underwater world composed of sharply defined corals and seaweed. Crystal-like bubbles rise around it, and soft beams of sunlight pierce the water's surface, creating ever-changing patches of light that illuminate the entire scene. The upward-looking perspective showcases the ocean's depth and grandeur, creating a tranquil atmosphere imbued with geometric aesthetics.

### Lighting Control

Core Principle: Light is the soul of atmosphere. If you know how to describe lighting, you gain control over the emotional tone of the entire video.

Common Techniques for Describing Lighting:

- Lighting Style: (e.g., soft light, hard light, neon lighting)
- Light Direction: (e.g., top-down lighting, side lighting)
- Light Quality: (e.g., diffused/soft, harsh, spotlight)
- Shadow Details: (e.g., deep shadows, soft gradients, high-contrast shadows)
- Color Temperature: (e.g., warm golden-hour tones, cool daylight, sunset glow)
- Reflections: (e.g., reflective highlights on water, glass, or metal surfaces)
- Silhouettes & Outlines: (e.g., backlit subjects creating dramatic silhouettes or rim lighting)

Examples:

A detective answers a phone call in a smoke-filled office. Afternoon sunlight filters through the blinds, casting sharp, parallel streaks of light on him and the opposite wall.
As he moves, the light and shadow constantly cut across the screen, creating a cinematic sense of destiny.

### Camera Movement Control

By adding standard camera-movement keywords to your prompts, you can significantly enhance the cinematic quality of your generated videos.

Below is a reference library of commonly used camera-movement terms:

Reference Camera Movement Library

Examples:

Text2Video:

A professional freestyle skier, dressed in a futuristic fluorescent ski suit, completes a jump in mid-air and lands atop a ski jump in a snow park. The backdrop is a snow-covered mountain and sky tinged with pink and purple by the sunset. Using a panoramic lens, the camera slowly pans around him in a 360-degree arc, capturing his body's rotation and posture from all angles. The lighting is backlighting at sunset, outlining him and the swirling snowflakes with a dreamlike golden contour. The overall effect is cinematic high-definition, in the style of an extreme sports commercial advertisement, creating an atmosphere of transcendence, pushing limits, and showcasing the beauty of human potential.

Image2Video:

The camera follows a girl riding a motorcycle, her hands gripping the handlebars tightly, her body leaning forward as the motorcycle speeds forward, its wheels kicking up dust. Huge cacti flank the road on the right side of the frame, disappearing into the background.
The camera then slowly pulls back, the girl and motorcycle gradually shrinking in size, with a convoy of trucks following closely behind on the dusty road behind them.

### Bilingual Text Rendering Inside Video

HunyuanVideo 1.5 is capable of generating clear, high-quality text directly within the video frames, supporting both Chinese and English.

How to use: Include the text you want to render inside quotation marks in your prompt.

- For Chinese prompts, use Chinese quotation marks: “ ”
- For English prompts, use English quotation marks: " "

This ensures the model correctly recognizes and renders the exact text you specify.

Image2Video:

The camera focuses on a woman in a white shirt, standing quietly in the center. Suddenly, she begins to breakdance, her body swaying rhythmically to the silent beat. Her arms swing, her steps are light, and her short hair sways gently with the dance. Then, the words "Hello, World" appear in the upper left corner of the screen.

## Additional Advanced Controls & Notes

### Supported Languages

The model currently supports Chinese and English prompts.

### Video Aspect Ratios

HunyuanVideo 1.5 supports multiple aspect ratios, including: 16:9 (landscape), 4:3, 1:1 (square), 3:4, and 9:16 (portrait). Please select the desired aspect ratio before generating.

### Keep It Simple

Use clear, direct vocabulary and straightforward sentence structures whenever possible.

### Prompt Component Breakdown

## More Creative Use Cases & Examples

### Strong Instruction Responsiveness

HunyuanVideo 1.5 natively supports long-form Chinese and English prompts, enabling it to understand and interpret complex semantic structures such as lighting, composition, spatial layout, and more.
It automatically maps these semantic details to video parameters, allowing for:

- Continuous camera movements
- In-frame text rendering
- Combined or sequential actions
- Multi-instruction generation with high accuracy

This makes it possible to create highly controlled, cinematic videos using only natural language.

Example:

The hiker begins walking forward along the trail, causing the water bottle to swing rhythmically with each step. The camera gradually pulls back and rises to reveal a vast desert landscape stretching out ahead, while the sun position shifts from afternoon to dusk, casting increasingly longer shadows across the terrain as the figure becomes smaller in the frame.

### Smooth & Natural Motion Generation

HunyuanVideo 1.5 produces smooth, physically coherent movements for both characters and objects. Motion remains natural and distortion-free, even in fast-paced shots or highly dynamic scenes.

Example:

A cake-man sits on a chair. Then, he reaches down and breaks off a piece of cake from his own leg, causing a few crumbs to fall as a visible chunk goes missing from the leg. Next, he lifts the broken piece toward his mouth, opens his mouth, and takes a bite, chewing a few times. The table and the wall in the background remain completely still.

### Realistic Physics at Play

Generate fluid, natural phenomena and rigid physical interactions with pinpoint accuracy, bringing your scenes to life with immersive realism and dynamic energy.

Example:

The video captures a basketball going through the hoop. The subject is the orange ball. Initially, it arcs through the air. Then, it passes through the net without touching the rim (swish). Next, the white net whips up violently. The background is the blurred crowd. The camera shoots from a low angle under the basket. The lighting is focused arena lighting.
The overall video presents a satisfying moment style.

### Cross-Dimensional Generation

HunyuanVideo 1.5 enables seamless cross-dimensional creation, bringing virtual characters and elements, such as cartoon figures or special effects, into real-world scenes. The model precisely interprets complex semantics, lighting, and material textures, ensuring virtual elements integrate naturally with reality for a fully immersive experience.

### Action Logic & Breakdown

HunyuanVideo 1.5 supports action decomposition, allowing you to generate complex movements by describing the subject’s motion in discrete states and leveraging precise visual cues.

Core Formula: Prompt = Scene Setup + Temporal Action Breakdown + Key Details

Example:

Static overhead shot of a printed photo of a tree trunk lying on a wooden table.

Action Sequence:

1. A real human hand enters, places a single pinecone on the paper next to the tree hole, and exits immediately.
2. A realistic 3D squirrel emerges from the 2D hole in the photo. The squirrel comes out empty-handed.
3. The squirrel sniffs the pinecone sitting on the paper, looks curious, blinks, and tilts its head.
4. The squirrel reaches out, grabs that specific pinecone from the table.

Key details: Seamless interaction between real world and photo, surreal VFX, squirrel paws are empty initially, heavy weight perception on the pinecone.
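
If you write many prompts in this shape, the Action Logic formula can be assembled programmatically. The sketch below is only plain string handling in Python; it does not call HunyuanVideo or TensorArt. The function name `build_action_prompt` and its parameters are hypothetical, chosen for illustration, and the layout simply mirrors the squirrel example above (scene setup, numbered action sequence, key details).

```python
def build_action_prompt(scene_setup, actions, key_details=()):
    """Assemble Scene Setup + Temporal Action Breakdown + Key Details.

    Hypothetical helper: it only formats text and is not part of any
    HunyuanVideo or TensorArt API.
    """
    if not actions:
        raise ValueError("at least one action step is required")

    # Number each discrete action state so the temporal order is explicit.
    steps = "\n".join(
        f"{i}. {step.strip()}" for i, step in enumerate(actions, start=1)
    )

    parts = [scene_setup.strip(), "Action Sequence:", steps]

    # Key details are optional; join them into one comma-separated line.
    details = ", ".join(d.strip() for d in key_details)
    if details:
        parts.append(f"Key details: {details}.")

    return "\n".join(parts)


prompt = build_action_prompt(
    "Static overhead shot of a printed photo of a tree trunk lying on a wooden table.",
    [
        "A real human hand enters, places a single pinecone on the paper, and exits immediately.",
        "A realistic 3D squirrel emerges from the 2D hole in the photo.",
    ],
    ["surreal VFX", "squirrel paws are empty initially"],
)
print(prompt)
```

Keeping each action as a separate list item makes it easy to reorder, add, or remove steps without retyping the whole prompt; paste the resulting text into the prompt box as usual.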